What is a Deepfake and How Does it Work?

The term “deepfake” combines “deep learning” and “fake.” Deepfakes use artificial intelligence (AI) to alter or generate images, videos, and audio so that the result looks and sounds real but isn’t. But why are deepfakes created, and how does this technology actually work?

Deepfakes rely on neural networks, AI systems loosely modeled on the human brain. When trained on large datasets of images, videos, or audio, these networks learn patterns and can generate highly realistic manipulations. One of the most widely used architectures is the Generative Adversarial Network (GAN).

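A GAN consists of two neural networks: a generator that creates the fake (the forger) and a discriminator that tries to detect it (the investigator). Over many training iterations, each improves against the other, so the forger’s output becomes increasingly realistic. This process is called adversarial training; “deep learning” refers to the many-layered neural networks involved.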
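The forger-and-investigator loop can be illustrated with a deliberately tiny sketch. It is not how real deepfake tools are built: the "real data" here is just numbers drawn around a hypothetical `REAL_MEAN`, the forger learns a single `offset`, and the investigator is a one-line logistic classifier. All names (`train_toy_gan`, `offset`, `w`, `c`) are assumptions made for illustration, and the gradients are written out by hand so that no machine learning library is needed.

```python
import math
import random

REAL_MEAN = 2.0  # assumption: "real" samples are numbers drawn from N(2, 1)


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, batch=32, lr=0.05, seed=0):
    """Alternate investigator and forger updates on 1-D toy data."""
    rng = random.Random(seed)
    offset = 0.0     # forger parameter: fake sample = noise + offset
    w, c = 0.0, 0.0  # investigator: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(REAL_MEAN, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + offset for _ in range(batch)]

        # Investigator step: gradient ascent on
        # log D(real) + log(1 - D(fake)), i.e. get better at telling them apart.
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (1.0 - d) * x
            grad_c += 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w -= d * x
            grad_c -= d
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Forger step: gradient ascent on log D(fake),
        # i.e. shift the fakes so the investigator rates them as more "real".
        grad_offset = sum((1.0 - sigmoid(w * x + c)) * w for x in fake) / batch
        offset += lr * grad_offset

    return offset, w, c


if __name__ == "__main__":
    offset, w, c = train_toy_gan()
    print(f"forger offset after training: {offset:.2f} (real mean is {REAL_MEAN})")
```

In this toy setting the forger starts at an offset of 0 and is pushed toward the real mean purely by the investigator's feedback. Real GANs replace both one-line models with deep neural networks and the numbers with images or audio.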

Different Types of Deepfakes

Deepfakes come in several forms:

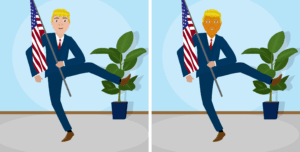

- Face-swapping: Replacing one person’s face with another in images or videos, often used for entertainment or impersonation.

- Voice swapping: Manipulating audio to mimic someone’s voice. Advanced versions sync lip and facial movements for realism.

- Body puppetry: Capturing and replicating body movements in real-time to imitate a person’s gestures or actions.

Why Are Deepfakes Dangerous?

Deepfakes can pose serious risks. The technology was first exploited in 2017 to create non-consensual adult content, mostly targeting women, and the volume of deepfake files has since skyrocketed: from 500,000 in 2023 to an estimated 8 million by 2025. These AI-manipulated media files now pose serious threats to both businesses and individuals, making detection and mitigation more critical than ever.
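To put those two figures in perspective, the short calculation below works out the implied growth. It uses only the numbers quoted above (500,000 files in 2023 and an estimated 8 million by 2025) and assumes, purely for illustration, that growth compounds evenly each year; `growth_summary` is a hypothetical helper name.

```python
def growth_summary(start, end, years):
    """Return (overall growth factor, implied yearly growth factor)."""
    factor = end / start
    yearly = factor ** (1 / years)  # assumes even compounding per year
    return factor, yearly


factor, yearly = growth_summary(500_000, 8_000_000, 2025 - 2023)
print(f"{factor:.0f}x overall, about {yearly:.0f}x per year")
# prints "16x overall, about 4x per year"
```

In other words, the quoted estimate implies the volume roughly quadrupling every year.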

As AI advances, the challenge isn’t just the technology; it’s the lack of reliable detection tools. Even in competitions that use massive datasets and multiple algorithms, entrants have struggled to detect deepfakes with more than a 65% success rate. This makes cybersecurity awareness and proactive monitoring essential.

Real-World Examples of Deepfake Misuse

Deepfakes have had tangible consequences worldwide:

- Gabon, 2018: A video of President Ali Bongo, widely suspected of being a deepfake, fuelled political unrest and preceded an attempted coup in January 2019.

- Emma González case: Opponents circulated a doctored clip that appeared to show the Parkland shooting survivor tearing up the US Constitution; the original footage showed a shooting target.

- Nancy Pelosi: A 2019 video was slowed down to make the then-Speaker appear to slur her words, gaining millions of views despite being false. It was a conventionally edited clip rather than an AI-generated deepfake.

- Rana Ayyub: A journalist was targeted with a fake pornographic video to damage her credibility.

Popular Deepfake Creation Tools

- DeepFaceLab: The most widely used open-source software for face-swapping and facial manipulation (Windows & Linux).

- Zao: Chinese smartphone app for instant deepfake videos, primarily for entertainment (Android & iOS, China-only).

- FaceApp: Alters apparent age or gender, or adds features like tattoos or makeup (Android & iOS). Users must consent to image rights being shared.

- Avatarify: Creates live deepfakes for video calls, mimicking facial movements in real-time (Windows, Mac, Linux, iOS).

How to Spot a Deepfake

Identifying deepfakes can be tricky. Check context carefully, and look for common signs highlighted by the FBI:

- Visual distortions, blurring, or inconsistent lighting

- Unnatural eye placement or facial asymmetry

- Odd head or body movements

- Lip sync issues between voice and facial movements

- Blurred or indistinct backgrounds

- Artifacts in pupils or earlobes
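One of these signs, blurring, can be measured roughly in code. The sketch below computes the variance of a simple Laplacian filter over a grayscale image, a common sharpness measure: low values suggest a smooth or blurred region. It is only one crude signal and not a deepfake detector; the function name `laplacian_variance` and the tiny test images are assumptions made for illustration, and a real pipeline would use an image library and compare regions such as the face against the rest of the frame.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the interior pixels.

    `gray` is a 2-D list of brightness values (rows of equal length).
    Higher values mean more sharp edges; values near zero mean a smooth
    or blurred image.
    """
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


# Two hypothetical 8x8 test images: a sharp checkerboard and a smooth ramp.
sharp = [[255 if (x + y) % 2 == 0 else 0 for x in range(8)] for y in range(8)]
smooth = [[10 * x for x in range(8)] for y in range(8)]

print(laplacian_variance(sharp) > laplacian_variance(smooth))  # prints True
```

A noticeably lower score on a face than on its surroundings might be worth a closer look, but it can just as easily come from focus, compression, or motion, so it should be treated as a prompt to check context rather than as proof.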

Positive Applications of Deepfake Technology

Not all deepfakes are harmful. In entertainment, AI has recreated a young Luke Skywalker in The Mandalorian, and Disney has developed megapixel-resolution deepfakes that it plans to use in new films. In education, Synthesia creates AI-driven e-learning videos using realistic avatars. Researchers have even animated the Mona Lisa, showcasing creative and ethical uses of deepfake AI.

Protecting Against Deepfakes: Cybersecurity Awareness

Training equips employees with the skills to detect and respond to manipulated media, safeguarding both the organisation and its digital integrity. Proactive training reduces the risk from malicious deepfakes, strengthens defenses, and empowers employees to act as vigilant guardians of authenticity. Learn more about safeguarding your organisation from AI-driven threats with the Human Risk Management Platform, featuring Automated Security Awareness, Advanced Phishing Simulations, Risk Intelligence & Analytics, and Compliance Management.

FAQ: Deepfakes Explained

What is a deepfake?

A deepfake is an AI-generated or AI-altered audio clip, image, or video that makes people appear to say or do things they never did. It’s created using machine learning techniques built on neural networks, such as GANs.

Are deepfakes illegal?

It depends on the use and the jurisdiction. Non-consensual content or fraud can be criminal, while entertainment uses may be legal.

How can I detect a deepfake?

Look for visual distortions, unnatural facial movements, lip-sync issues, or inconsistent backgrounds.

Can AI always detect deepfakes?

No. Even advanced AI struggles to detect highly realistic deepfakes consistently.

Are deepfakes only used for negative purposes?

No. They also have ethical uses in movies, marketing, e-learning, and creative projects.

How can I protect my organisation from deepfakes?

Train staff in cybersecurity awareness and implement monitoring tools to detect suspicious content.